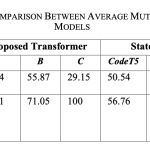

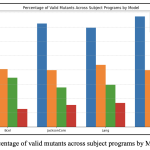

Abstract— For mutation testing (MT) to be both cost-effective and an accurate measure of test suite adequacy, injected faults should resemble real-world bug patterns. However, traditional mutation tools apply predefined mutation operators (MOs) at every applicable code location, which produces a large number of mutants and drives up MT cost. Our previous work demonstrated that pre-trained transformers such as CodeT5 can be fine-tuned on a bug-fix dataset to generate mutants for MT. Nonetheless, invalid mutants, including those with syntax errors or code identical to the original, remain prevalent. To address this issue, we propose a new transformer architecture that emphasizes local context within different parts of the input code while preserving attention to the global code context. Experimental results on a bug-fix test dataset show that the proposed Transformer A generates 21.95% more valid mutants and achieves a 4.5-point higher average character n-gram F-score (CHRF) than CodeT5. In addition, the low Jensen-Shannon (JS) divergence (0.008) between the distribution of generated mutation patterns and that of real-world bug patterns indicates that Transformer A produces mutations with diversity comparable to real-world bugs.
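As an illustration of the diversity metric mentioned in the abstract, the following is a minimal sketch of how a JS divergence between two mutation-pattern frequency distributions could be computed. It is not the paper's evaluation code: the function name `js_divergence`, the pattern labels, the counts, and the choice of a base-2 logarithm are all assumptions made for this example.

```python
import math


def js_divergence(p_counts, q_counts, base=2.0):
    """Jensen-Shannon divergence between two count dictionaries.

    Assumption: each dictionary maps a mutation-pattern label to how often
    that pattern occurs. The log base (2 here) is also an assumption; with
    base 2 the result lies in [0, 1].
    """
    keys = set(p_counts) | set(q_counts)

    def normalize(counts):
        total = sum(counts.values())
        if total <= 0:
            raise ValueError("counts must sum to a positive value")
        return {k: counts.get(k, 0) / total for k in keys}

    p = normalize(p_counts)
    q = normalize(q_counts)
    # Mixture distribution M = (P + Q) / 2
    m = {k: 0.5 * (p[k] + q[k]) for k in keys}

    def kl(a, b):
        # Terms with a[k] == 0 contribute 0; b[k] > 0 whenever a[k] > 0.
        return sum(a[k] * math.log(a[k] / b[k], base) for k in keys if a[k] > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Hypothetical pattern counts, for illustration only.
generated = {"operator_swap": 40, "literal_change": 35, "call_removed": 25}
real_bugs = {"operator_swap": 38, "literal_change": 34, "call_removed": 28}
print(js_divergence(generated, real_bugs))
```

A value near 0 means the two distributions are close; a value of 1 (in base 2) means they share no patterns at all.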

Keywords—mutation testing, cost, code mutation, transformer

Yik, L. Z., bin Wan Kadir, W. M. N., & binti Ibrahim, N. (2023, August). A Systematic Literature Review on Solutions of Mutation Testing Problems. In 2023 IEEE 8th International Conference On Software Engineering and Computer Systems (ICSECS) (pp. 64-71). IEEE.

Loh, Z. Y., Kadir, W. M. N. W., & Ibrahim, N. (2024). A Comparative Evaluation of Transformers in Seq2Seq Code Mutation: Non-Pre-trained Vs. Pre-trained Variants. Journal of Advanced Research Design, 123(1), 45-65.

Towards Valid Mutants with Realistic Faults: A New Transformer Architecture (to be published in a Scopus-indexed conference)

PyTorch, NumPy, pandas